Building a Toy Container Vulnerability Scanner

After doing some work around container vulnerability scanning recently, I realized that I had a pretty incomplete mental model of how scanners like Snyk or AWS Inspector actually work. A couple questions came up for me, the most pressing being: why can’t I use AWS Inspector to scan the otel/opentelemetry-collector image we use as a base for deploying the OTel collector in prod?

The answer was pretty simple: the main stage of the collector uses scratch as a base, and Inspector’s ECR integration doesn’t support scratch base images. But I also learned that I didn’t know all that much about container scanning, and decided it was worth investing more time to fix that. After more research, I realized that vulnerability scanning is a surprisingly straightforward process, and that this was a good opportunity to get some hands-on experience working directly with Docker images.

This post explains what I did to build a “toy” vulnerability scanner, and what I learned. I used Go but my hope is that the code is simple enough for non-Go programmers to follow, and I’ve tried to link to as many sources as possible to make it easier to follow the decisions made. The completed project is on GitHub at jemisonf/toy-scanner.

But first:

Some background

Understanding what actually constitutes a container image [1] is really important to understanding container scanning. If you’re already familiar with image manifests and layers, feel free to skip ahead to the next section.

Container images, as specified in the Open Container Initiative image-spec document, are made up of “layers” of files, with a “manifest” document that describes what layers exist in the image.

You can think of an individual layer as just a bundle of files archived with tar and compressed with gzip. Roughly speaking, each Dockerfile instruction that changes the filesystem (ADD, COPY, or RUN) produces one layer; the line

ADD package.json package-lock.json .

will create a single layer containing those two files at their destination paths in your container’s filesystem.

When you run a container, Docker applies those layers one at a time to the container filesystem in the order that they were created. So by inspecting each layer in an image, you can see every file that will appear in the container when it’s started.
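To make the "apply layers in order" idea concrete, here is a minimal sketch that builds two gzip-compressed tarballs in memory and unpacks them into a single map, so a file in a later layer replaces the same path from an earlier one. The makeLayer and applyLayers helpers and the file names are made up for illustration, and the sketch ignores how real images record deleted files.

```go
package main

import (
	"archive/tar"
	"bytes"
	"compress/gzip"
	"fmt"
	"io"
)

// makeLayer builds a gzip-compressed tarball in memory from a path -> contents map.
// Hypothetical helper, used here only to fake a layer without pulling an image.
func makeLayer(files map[string]string) ([]byte, error) {
	var buf bytes.Buffer
	gz := gzip.NewWriter(&buf)
	tw := tar.NewWriter(gz)
	for name, body := range files {
		hdr := &tar.Header{Name: name, Mode: 0o644, Size: int64(len(body))}
		if err := tw.WriteHeader(hdr); err != nil {
			return nil, err
		}
		if _, err := tw.Write([]byte(body)); err != nil {
			return nil, err
		}
	}
	if err := tw.Close(); err != nil {
		return nil, err
	}
	if err := gz.Close(); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

// applyLayers unpacks each layer in order into one map, so a file in a later
// layer replaces the same path from an earlier layer.
func applyLayers(layers [][]byte) (map[string]string, error) {
	fs := map[string]string{}
	for _, layer := range layers {
		gz, err := gzip.NewReader(bytes.NewReader(layer))
		if err != nil {
			return nil, err
		}
		tr := tar.NewReader(gz)
		for {
			hdr, err := tr.Next()
			if err == io.EOF {
				break // end of this layer
			}
			if err != nil {
				return nil, err
			}
			body, err := io.ReadAll(tr)
			if err != nil {
				return nil, err
			}
			fs[hdr.Name] = string(body)
		}
	}
	return fs, nil
}

func main() {
	base, err := makeLayer(map[string]string{
		"etc/os-release": "VERSION_ID=3.15.0\n",
		"bin/tool":       "v1",
	})
	if err != nil {
		panic(err)
	}
	update, err := makeLayer(map[string]string{"etc/os-release": "VERSION_ID=3.15.1\n"})
	if err != nil {
		panic(err)
	}
	fs, err := applyLayers([][]byte{base, update})
	if err != nil {
		panic(err)
	}
	fmt.Printf("%d files, os-release = %q\n", len(fs), fs["etc/os-release"])
}
```

The "last layer wins" behavior matters for scanning too: if the same file shows up in more than one layer, the copy from the latest layer is the one the running container will see.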

Manifests are just metadata that tells container clients what layers exist for a given image. You don’t need to know a ton about them for the purposes of this post, just that they can tell us how to find the layers for the image we’re scanning. You can see the full specification in the OCI spec.

It’s also important to mention that manifests are just JSON files, and layers are essentially just tarballs. There are software libraries I’ll talk about in the next section that make it substantially easier to interact with container images, but a running Docker daemon isn’t required and even the libraries are optional – it would be possible to implement this toy scanner in a shell script using curl and tar if you were into that kind of thing.
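Since a manifest is just JSON, you can read one with nothing but the standard library. The sketch below decodes a hand-written manifest and lists its layer digests. The field names follow the OCI image manifest, but the struct and function names are my own, and the digests and sizes are shortened placeholders rather than real values.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// descriptor mirrors the fields of an OCI content descriptor that we care about.
type descriptor struct {
	MediaType string `json:"mediaType"`
	Digest    string `json:"digest"`
	Size      int64  `json:"size"`
}

// manifest is a partial view of an OCI image manifest.
type manifest struct {
	SchemaVersion int          `json:"schemaVersion"`
	Config        descriptor   `json:"config"`
	Layers        []descriptor `json:"layers"`
}

// sampleManifest is a made-up example; the digests are placeholders.
const sampleManifest = `{
  "schemaVersion": 2,
  "mediaType": "application/vnd.oci.image.manifest.v1+json",
  "config": {
    "mediaType": "application/vnd.oci.image.config.v1+json",
    "digest": "sha256:0000",
    "size": 1472
  },
  "layers": [
    {"mediaType": "application/vnd.oci.image.layer.v1.tar+gzip", "digest": "sha256:1111", "size": 2818413},
    {"mediaType": "application/vnd.oci.image.layer.v1.tar+gzip", "digest": "sha256:2222", "size": 1024}
  ]
}`

// layerDigests returns the digest of every layer, in manifest order.
func layerDigests(raw []byte) ([]string, error) {
	var m manifest
	if err := json.Unmarshal(raw, &m); err != nil {
		return nil, fmt.Errorf("decoding manifest: %w", err)
	}
	digests := make([]string, 0, len(m.Layers))
	for _, layer := range m.Layers {
		digests = append(digests, layer.Digest)
	}
	return digests, nil
}

func main() {
	digests, err := layerDigests([]byte(sampleManifest))
	if err != nil {
		panic(err)
	}
	for i, d := range digests {
		fmt.Printf("layer %d: %s\n", i, d)
	}
}
```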

How do you scan a container for vulnerabilities?

For this section, I wanted to be careful to avoid reinventing the wheel, so I did some research on existing container scanners to see what information is available about their approach. Snyk, a leading container security product, is unfortunately not open source, and I didn’t find readily available resources about how they implement their product. But Clair, developed under Red Hat’s Quay project, is open source, and has published an overview of its architecture and implementation here: https://quay.github.io/claircore/.

Clair’s architecture has two key components:

- Indexers, which continuously scan for new image layers, pull in an image to identify which packages are installed in it, and generate a “report” that can be consumed by other Clair components

- Matchers, which take reports produced by indexers and compare the packages in each report against public vulnerability databases to identify whether any package contains vulnerabilities

A lot of engineering work in Clair and other projects goes into supporting continuous scanning of container registries and checking for a wide range of operating system and language dependencies. So I added some constraints to make my life a little easier:

- The toy scanner should just be a CLI tool that scans a single image on-demand

- The scanner will only support Alpine packages

I picked Alpine because my impression from the Clair source code was that Alpine is one of the simpler distributions to scan for installed packages. At the same time, learning to scan Alpine will teach you most of what you’d need to know about container scanning in general because most individual scanners tend to follow a similar set of patterns.

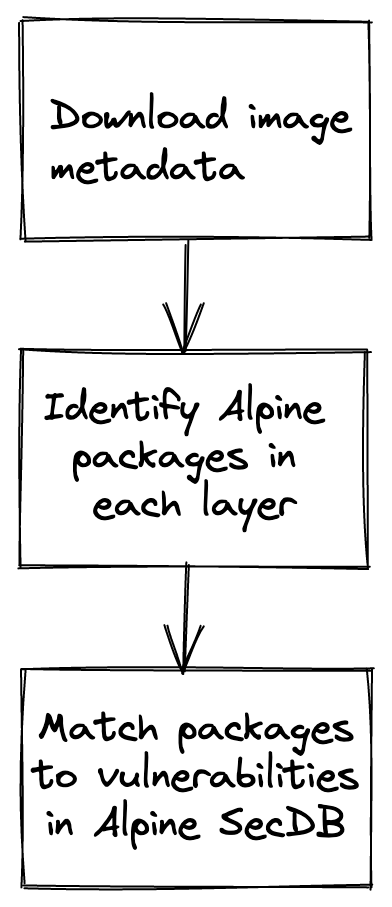

With those restrictions in place, our much-simplified scanner architecture looks like:

Scanner architecture

Without much further ado, let’s get to it!

Step 1: setup and image metadata

I used Go for building the components of the scanner so I could use Google’s go-containerregistry module, which makes working with remote container images extremely simple. Reading an image manifest just requires parsing the image tag with name.ParseReference and then downloading the image with remote.Image. The collected code looks like:

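package main

import (
	"flag"
	"fmt"
	"os"

	"github.com/google/go-containerregistry/pkg/name"
	"github.com/google/go-containerregistry/pkg/v1/remote"
)

func main() {
	var image string
	flag.StringVar(&image, "image", "", "Image to scan")
	flag.Parse()

	// turn the image name into a registry reference
	ref, err := name.ParseReference(image)
	if err != nil {
		fmt.Printf("Error parsing image name: %s\n", err.Error())
		os.Exit(1)
	}

	docker_image, err := remote.Image(ref)
	if err != nil {
		fmt.Printf("Error fetching image: %s\n", err.Error())
		os.Exit(1)
	}

	manifest, err := docker_image.Manifest()
	if err != nil {
		fmt.Printf("Error fetching manifest: %s\n", err.Error())
		os.Exit(1)
	}

	if manifest != nil {
		// do something
	}
}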

Having manifest and docker_image gives us all the information we need for the next section. manifest has a Layers field we can use to find the digest [2] for each layer of the image, which we can then pass to the LayerByDigest method on docker_image to get a Layer object.
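To make the idea of a digest a little more concrete, here is a minimal sketch of computing one with Go’s standard library. It assumes sha256 and simply hashes whatever bytes you hand it; the digest helper and the sample bytes are made up for illustration and are not part of the toy scanner.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// digest returns a content-addressed identifier in the "sha256:<hex>" form
// used for layers. Hypothetical helper for illustration only.
func digest(content []byte) string {
	return fmt.Sprintf("sha256:%x", sha256.Sum256(content))
}

func main() {
	// Stand-in bytes; a real digest would be computed over a layer blob.
	blob := []byte("pretend this is a layer tarball")
	fmt.Println(digest(blob))
}
```

Because the identifier is derived from the bytes themselves, two blobs with different contents get different digests, which is what lets a manifest point at exactly the layers it means.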

Step 2: identifying packages

Before we start: I would like to say that I am not an expert at Alpine, vulnerability databases, or Linux package management in general, and that I can vouch for the educational value of this section in regards to learning how vulnerability scanners work but I can’t promise there aren’t some errors as I start to get into specifics.

For this step, I had to do some research on actually scanning Alpine for vulnerabilities. I started at the end, by asking what minimum information you’d need to match a package to a vulnerability. Looking at Alpine SecDB, which is listed as Clair’s vulnerability database for Alpine, I found that the three most relevant pieces of data are the Alpine distro version, the package name, and the package version. Here’s how I encoded that information:

type AlpineReport struct {
	Packages []AlpinePackage
	Version  string
}

type AlpinePackage struct {
	Name    string
	Version string
}

The term “report” here is borrowed from Clair, where indexers produce reports that are consumed by vulnerability matchers.

The outline of what I called the AlpineScanner function looks like this:

func AlpineScanner(manifest v1.Manifest, image v1.Image) (*AlpineReport, error) {
	layers := []v1.Layer{}

	// resolve each layer descriptor in the manifest to a Layer object
	for _, layer_desc := range manifest.Layers {
		layer, err := image.LayerByDigest(layer_desc.Digest)
		if err != nil {
			// %w needs the error value itself, not err.Error()
			return nil, fmt.Errorf("Error fetching layer %s: %w", layer_desc.Digest, err)
		}
		layers = append(layers, layer)
	}

	packages := []AlpinePackage{}
	var version string

	for _, layer := range layers {
		// scan layer
	}

	return &AlpineReport{
		Packages: packages,
		Version:  version,
	}, nil
}

In short: collect the metadata for each layer based on its digest, then iterate over the layers one by one and collect the packages for each layer into a report containing the full list of packages in the image.

That leaves two things to implement while scanning the layers: find the Alpine distro version, and find each package installed.

First, we have to figure out how to scan individual files in each layer. I had to do some research on this, but it turned out to be pretty straightforward. layer.Uncompressed() returns an io.ReadCloser that streams the uncompressed tarball for the layer, and we can pass that into tar.NewReader to get a tar.Reader that we can use to iterate over individual files:

reader, err := layer.Uncompressed()
if err != nil {
	return nil, err
}
tar_reader := tar.NewReader(reader)

header, err := tar_reader.Next()
for header != nil && err == nil {
	// process the file
	// ...

	// now advance to the next file
	header, err = tar_reader.Next()
}

// release the layer stream once every file has been visited
reader.Close()

Calling tar_reader.Next() here will return a header object for each subsequent file in the tarball, which we can use to get the name of the file; the file’s contents can then be read from tar_reader itself.

One reason I picked Alpine is that the next steps are really simple. Reading through Clair’s implementation, there are two files we need to look for:

- A file at /lib/apk/db/installed that has a list of every package installed on the system

- A file at /etc/os-release that contains the distribution version

When we find /lib/apk/db/installed, we need to do a little additional parsing. If you run docker run alpine cat /lib/apk/db/installed you can see a full example, but here’s a single package for reference:

C:Q1tSkotDvdkl639V+pj5uFYx3AGIQ=
P:libssl1.1
V:1.1.1n-r0
A:aarch64
S:207620
I:536576
T:SSL shared libraries
U:https://www.openssl.org/
L:OpenSSL
o:openssl
m:Timo Teras <timo.teras@iki.fi>
t:1647383879
c:455e966899a9358fc94f5bce633afe8a1942095c
D:so:libc.musl-aarch64.so.1 so:libcrypto.so.1.1
p:so:libssl.so.1.1=1.1
r:libressl
F:lib
R:libssl.so.1.1
a:0:0:755
Z:Q1jtZ7ec5Jx9TJ+IUk5mJnXnn+Gd4=
F:usr
F:usr/lib
R:libssl.so.1.1
a:0:0:777
Z:Q18j35pe3yp6HOgMih1wlGP1/mm2c=

Each one of these package blocks is separated by a double newline, and then each line within the block represents a different field. Because I couldn’t find documentation about the details of the format, my implementation borrows really heavily from Clair’s Alpine package scanner:

if header.Name == "lib/apk/db/installed" {
	contents, _ := io.ReadAll(tar_reader)

	// package blocks are separated by a blank line
	entries := bytes.Split(contents, []byte("\n\n"))
	for _, entry := range entries {
		lines := bytes.Split(entry, []byte("\n"))

		var name, version string
		for _, line := range lines {
			if len(line) == 0 {
				continue
			}

			// each line is "<field letter>:<value>"
			switch line[0] {
			case 'P':
				name = string(line[2:])
			case 'V':
				version = string(line[2:])
			}
		}

		if name != "" && version != "" {
			packages = append(packages, AlpinePackage{Name: name, Version: version})
		}
	}
}

I’m deliberately dropping a bunch of fields here from the package block to keep the implementation simpler. I suspect that means there are edge cases this code might not handle, but I’m not super concerned about that since my intent here is not to write an airtight vulnerability scanner.
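One dropped field that might be worth keeping is the o: line. Judging from the sample block above (P:libssl1.1 with o:openssl), it looks like the origin package that the binary package was built from, though I haven’t found documentation confirming that. Here is a self-contained sketch of a parser that keeps it alongside the name and version. The pkgEntry type and parseInstalled function are hypothetical names, and the input is a trimmed-down version of the format shown above.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// pkgEntry holds the fields kept from one package block.
// Origin comes from the "o:" line, which I am assuming is the source package.
type pkgEntry struct {
	Name, Version, Origin string
}

// parseInstalled walks the database line by line; a blank line ends a block.
func parseInstalled(db string) []pkgEntry {
	var out []pkgEntry
	var cur pkgEntry
	flush := func() {
		// only keep blocks that had both a name and a version
		if cur.Name != "" && cur.Version != "" {
			out = append(out, cur)
		}
		cur = pkgEntry{}
	}

	sc := bufio.NewScanner(strings.NewReader(db))
	for sc.Scan() {
		line := sc.Text()
		if line == "" {
			flush()
			continue
		}
		if len(line) < 2 || line[1] != ':' {
			continue // not a "<letter>:<value>" line
		}
		switch line[0] {
		case 'P':
			cur.Name = line[2:]
		case 'V':
			cur.Version = line[2:]
		case 'o':
			cur.Origin = line[2:]
		}
	}
	flush() // the last block may not be followed by a blank line
	return out
}

func main() {
	sample := "P:libssl1.1\nV:1.1.1n-r0\no:openssl\n\nP:musl\nV:1.2.2-r7\no:musl\n"
	for _, p := range parseInstalled(sample) {
		fmt.Printf("%s %s (origin %s)\n", p.Name, p.Version, p.Origin)
	}
}
```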

Next, we need to find the Alpine version used by the container. This is straightforward enough to do just by checking /etc/os-release, a standard across Linux distributions for where the distribution version is stored. As an example:

$ docker run alpine cat /etc/os-release
NAME="Alpine Linux"
ID=alpine
VERSION_ID=3.15.1
PRETTY_NAME="Alpine Linux v3.15"
HOME_URL="https://alpinelinux.org/"
BUG_REPORT_URL="https://bugs.alpinelinux.org/"

I don’t think that just relying on VERSION_ID here will necessarily cover every edge case, but like with the package versions that should be ok for the purposes of this tool. Here’s the implementation:

if header.Name == "etc/os-release" {
	contents, _ := io.ReadAll(tar_reader)

	lines := bytes.Split(contents, []byte("\n"))
	for _, line := range lines {
		// match only the VERSION_ID key itself, not other keys that contain it
		if strings.HasPrefix(string(line), "VERSION_ID=") {
			fmt.Sscanf(string(line), "VERSION_ID=%s", &version)
		}
	}
}
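One of the edge cases I have in mind is quoting: the NAME and PRETTY_NAME values in the example above are wrapped in double quotes, and I’d expect some images to quote VERSION_ID the same way. A minimal standalone sketch that tolerates that could look like the following; versionID is a hypothetical helper, not the function used in the toy scanner.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// versionID returns the VERSION_ID value from os-release contents,
// with surrounding quotes removed, or "" if the key is missing.
func versionID(osRelease string) string {
	sc := bufio.NewScanner(strings.NewReader(osRelease))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if !strings.HasPrefix(line, "VERSION_ID=") {
			continue
		}
		value := strings.TrimPrefix(line, "VERSION_ID=")
		return strings.Trim(value, `"'`)
	}
	return ""
}

func main() {
	alpine := "NAME=\"Alpine Linux\"\nID=alpine\nVERSION_ID=3.15.1\n"
	fmt.Println(versionID(alpine))
}
```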

With that done, we can print out the return from the scanner and get something like:

{Packages:[{Name:musl Version:1.2.2-r7} {Name:busybox Version:1.34.1-r4} {Name:alpine-baselayout Version:3.2.0-r18} {Name:alpine-keys Version:2.4-r1} {Name:ca-certificates-bundle Version:20211220-r0} {Name:libcrypto1.1 Version:1.1.1n-r0} {Name:libssl1.1 Version:1.1.1n-r0} {Name:libretls Version:3.3.4-r2} {Name:ssl_client Version:1.34.1-r4} {Name:zlib Version:1.2.11-r3} {Name:apk-tools Version:2.12.7-r3} {Name:scanelf Version:1.3.3-r0} {Name:musl-utils Version:1.2.2-r7} {Name:libc-utils Version:0.7.2-r3}] Version:v3.15}

Step 3: matching packages and vulnerabilities

Let’s start with some data structures. I relied pretty heavily on the Alpine SecDB data format to figure out how to structure the data in the program. You can see an example here.

Based on that, the return value we’re looking for would look something like:

type Vulnerability struct {
	CVEs        []string
	PackageName string
	Version     string
}

It’s also handy to have a partial data structure to unwrap the SecDB data into. I used three structs for that:

type SecDBReport struct {
	Packages []SecDBPackage
}

type SecDBPackage struct {
	Pkg SecDBPkg
}

type SecDBPkg struct {
	Name     string
	Secfixes map[string][]string
}

That just leaves some setup to do in the actual method, specifically:

- Compute the major.minor version of the distro from the major.minor.patch version we already have

- Use that to fetch the vulnerability document from SecDB

- Unwrap that value into a SecDBReport object

With some error handling omitted, that looks like:

func AlpineMatcher(report AlpineReport) ([]Vulnerability, error) {
	vulnerable_packages := []Vulnerability{}

	// the SecDB URL is keyed by major.minor version, e.g. v3.15
	majorMinorVersion := semver.MajorMinor("v" + report.Version)

	client := http.Client{}
	secDBURL, _ := url.Parse(fmt.Sprintf("https://secdb.alpinelinux.org/%s/main.json", majorMinorVersion))
	res, _ := client.Do(&http.Request{
		Method: "GET",
		URL:    secDBURL,
	})
	defer res.Body.Close()

	responseBytes, _ := io.ReadAll(res.Body)

	var contents SecDBReport
	json.Unmarshal(responseBytes, &contents)

	// check vulnerabilities here

	return vulnerable_packages, nil
}
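If you want to try the unwrapping step without hitting the network, here is a small offline sketch. It decodes a hand-written document shaped like the SecDB structs above and computes the major.minor string with plain string handling instead of the semver package. The package name and CVE identifier in the sample are placeholders I made up, and the lowercase JSON keys are assumed from the struct field names.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// Partial view of a SecDB document, with the JSON keys spelled out.
type secDBReport struct {
	Packages []struct {
		Pkg struct {
			Name     string              `json:"name"`
			Secfixes map[string][]string `json:"secfixes"`
		} `json:"pkg"`
	} `json:"packages"`
}

// sampleSecDB is made-up data in the same shape; none of it is real.
const sampleSecDB = `{
  "packages": [
    {"pkg": {"name": "examplepkg", "secfixes": {"1.2.3-r1": ["CVE-0000-0001"]}}}
  ]
}`

// majorMinor turns "3.15.1" into "v3.15" for use in the SecDB URL.
func majorMinor(version string) string {
	parts := strings.SplitN(version, ".", 3)
	if len(parts) < 2 {
		return "v" + version
	}
	return "v" + parts[0] + "." + parts[1]
}

func decodeSecDB(raw []byte) (secDBReport, error) {
	var report secDBReport
	err := json.Unmarshal(raw, &report)
	return report, err
}

func main() {
	report, err := decodeSecDB([]byte(sampleSecDB))
	if err != nil {
		panic(err)
	}
	fmt.Println(majorMinor("3.15.1"))
	for _, p := range report.Packages {
		fmt.Println(p.Pkg.Name, p.Pkg.Secfixes)
	}
}
```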

To actually match packages to vulnerabilities, we can compare every installed package to every SecDB entry and, when the names match, check whether the installed version appears as a key in that entry’s Secfixes map:

for _, installed_package := range report.Packages {
	for _, secdb_package := range contents.Packages {
		if secdb_package.Pkg.Name == installed_package.Name {
			// look up the installed version in the SecDB entry for this package
			if CVEs, ok := secdb_package.Pkg.Secfixes[installed_package.Version]; ok {
				vulnerable_packages = append(vulnerable_packages, Vulnerability{
					PackageName: installed_package.Name,
					Version:     installed_package.Version,
					CVEs:        CVEs,
				})
			}
		}
	}
}
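One caveat I’m not fully sure about: as far as I can tell from the SecDB data, the keys in secfixes are the package versions in which the listed CVEs were fixed. If that reading is right, an exact-match lookup only fires when the installed version is itself the fixed one, and a matcher would instead want to flag installed versions older than the fix. Below is a rough sketch of that idea. The compareVersions and unfixedCVEs helpers are hypothetical, the comparison is deliberately simplified and does not implement apk’s real version ordering, and the CVE identifiers are placeholders.

```go
package main

import (
	"fmt"
	"sort"
	"strconv"
	"strings"
)

// leadingNumber splits a component like "1n" into its number (1) and the rest ("n").
func leadingNumber(s string) (int, string) {
	i := 0
	for i < len(s) && s[i] >= '0' && s[i] <= '9' {
		i++
	}
	n, _ := strconv.Atoi(s[:i]) // an empty prefix is treated as 0
	return n, s[i:]
}

// splitRevision separates "1.1.1n-r0" into "1.1.1n" and the package revision 0.
func splitRevision(v string) (string, int) {
	if i := strings.LastIndex(v, "-r"); i >= 0 {
		if n, err := strconv.Atoi(v[i+2:]); err == nil {
			return v[:i], n
		}
	}
	return v, 0
}

// compareVersions returns -1, 0, or 1. Simplified on purpose: it does NOT
// implement apk's full ordering (suffixes such as _rc or _p are not understood).
func compareVersions(a, b string) int {
	ua, ra := splitRevision(a)
	ub, rb := splitRevision(b)
	pa, pb := strings.Split(ua, "."), strings.Split(ub, ".")
	for i := 0; i < len(pa) || i < len(pb); i++ {
		var ca, cb string
		if i < len(pa) {
			ca = pa[i]
		}
		if i < len(pb) {
			cb = pb[i]
		}
		na, sa := leadingNumber(ca)
		nb, sb := leadingNumber(cb)
		if na != nb {
			if na < nb {
				return -1
			}
			return 1
		}
		if c := strings.Compare(sa, sb); c != 0 {
			return c
		}
	}
	switch {
	case ra < rb:
		return -1
	case ra > rb:
		return 1
	}
	return 0
}

// unfixedCVEs returns the CVEs whose fix version is newer than the installed version.
func unfixedCVEs(installed string, secfixes map[string][]string) []string {
	var cves []string
	for fixedIn, ids := range secfixes {
		if compareVersions(installed, fixedIn) < 0 {
			cves = append(cves, ids...)
		}
	}
	sort.Strings(cves) // map iteration order is random, so sort for stable output
	return cves
}

func main() {
	secfixes := map[string][]string{
		"0.9.0-r0": {"CVE-0000-0001"}, // placeholder IDs, not real CVEs
		"1.0.1-r0": {"CVE-0000-0002"},
	}
	fmt.Println(unfixedCVEs("1.0.0-r0", secfixes))
}
```

If the o: field in the installed database really is the origin package, it may also be worth matching SecDB names against that rather than the binary package name, but I haven’t verified that either.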

Testing it out with go run . -image alpine you get . . . no vulnerabilities found. Which is actually expected – Alpine is a small distribution with good security hygiene, so the latest tag typically won’t have any issues to find.

Can we test it with a known-bad image to make sure we aren’t getting false negatives? I had a surprising (in a good way) amount of difficulty doing this – Alpine doesn’t have any of the vulnerable packages I looked for in its package index, and I’m not sure where to look for an old image that might contain the vulnerable packages. So I’ll, uh, leave further testing as an exercise to the reader.

Conclusion

I had a couple of fairly important takeaways about container vulnerability scanners while working on this that I think are worth sharing, because I suspect they’re related to common misconceptions about container scanning:

- Container scanners don’t require any kind of special manifest to identify installed packages. They just read the files in the image; there’s no extra magic happening to make scanning possible.

- Container scanners do need packages to be installed in a standardized way. As we saw when building the Alpine scanner, anything not installed through apk would not have been caught by our scanner.

  With a normal scanner, you can assume it will also catch anything installed with standard language tooling like pip or npm, but you should not assume that something downloaded directly can be scanned.

- Container scanners don’t operate on “your” code at all. They won’t catch logic errors or previously-unknown vulnerabilities in your implementation.

  Running static analysis of your code would be possible in theory but it isn’t a standard practice for container scanners, and it is not possible to do anything that requires dynamically executing code.

- Container scanners are based on reported vulnerabilities, so the value of a scanner is going to depend partly on how good a language or distribution is about identifying and publishing vulnerabilities.

- Container scanners are for trusted images. Bypassing a container scanner to ensure that an image contains an insecure binary would be trivial, and attackers have various tools to compress layers together to make it even more difficult to detect that a layer contains malicious code.

  That means that your scans are useful if you can trust that the images you use are installing packages in a standardized way and otherwise not trying to deceive you about their contents. The scanner protects you from yourself, not from other people.

Taken together, those points emphasize that container scanning has an important but limited role: it helps make sure that trusted container images are using up-to-date dependencies without known security vulnerabilities, but it doesn’t replace other security best practices. I also learned a lot about interacting directly with container images, and I think this is a neat example of how much you can do with an image without running a Docker daemon at all.

[1] Whether or not we’re talking about a “Docker” image is an interesting question. Early in the days when containers were becoming a mainstream technology, Docker containers and “containers” were essentially synonymous. But now, Docker is no longer the only way to run containers or even the most popular; containerd is the container runtime that underlies a lot of managed container services, the Kubernetes project is removing Docker entirely, and you can find a number of container building services like buildah or ko that can build OCI-compliant container images without a Docker daemon. I’m generally going to try to use the term “container” instead of “Docker container” in this post, and you can assume everything mentioned here refers to OCI containers and is not Docker exclusive.

[2] The digest of a layer is a hashed value that can be used to uniquely identify the layer, and that is generated based on the contents of the layer. sha256 is the most common method I have seen for generating digests. See the OCI descriptor specification for more detail.